iptables is a Linux firewall utility that lets system administrators configure rules for filtering and modifying network traffic. The rules are enforced by the kernel's netfilter framework, which examines each packet that passes through the system and decides what to do with it based on the rules the administrator has defined.

In the context of iptables, tables are organizational units that contain chains and rules. There are five built-in tables in iptables:

- filter – This is the default table and is used for packet filtering.

- nat – This table is used for network address translation (NAT).

- mangle – This table is used for specialized packet alteration.

- raw – This table is used for configuring exemptions from connection tracking in combination with the NOTRACK target.

- security – This table is used for Mandatory Access Control (MAC) networking rules.

In iptables, a chain is an ordered list of rules that a packet is checked against at a particular point in its path through the system. Each table contains several built-in chains, and administrators can add user-defined chains to any table.

The rules in a chain determine what happens to packets as they pass through the firewall.

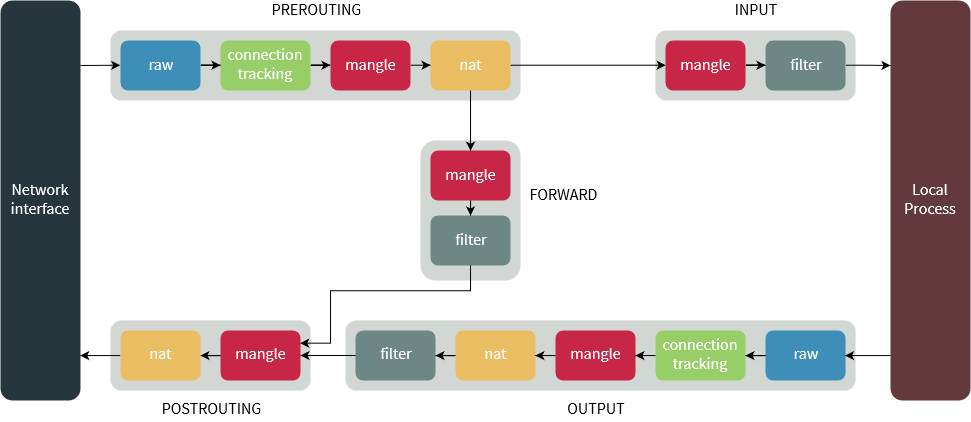

The name “chain” comes from the way that iptables processes packets: each chain is a sequence of rules that are checked in order, and the first rule that matches with a terminating target (such as ACCEPT, DROP, or REJECT) decides what happens to the packet. A packet that arrives on a network interface first traverses the PREROUTING chain. The kernel then makes a routing decision. If the packet is addressed to the local host, it goes to the INPUT chain. Otherwise it goes to the FORWARD chain and then to the POSTROUTING chain before it leaves the machine.

Packets generated by local processes take a different path: they traverse the OUTPUT chain and then the POSTROUTING chain. Along either path, processing continues until the packet is accepted, rejected, or dropped. If a packet reaches the end of a built-in chain without any rule deciding its fate, the chain's default policy applies.
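As a rough illustration of the usual netfilter traversal order, the sketch below models which built-in chains a packet visits depending on where it came from and where it is going. The names `Origin` and `chains_traversed` are hypothetical, and the model ignores the fact that each chain is visited once per table that contains it.

```python
from enum import Enum


class Origin(Enum):
    NETWORK = "network"  # packet arrived on a network interface
    LOCAL = "local"      # packet was generated by a process on this host


def chains_traversed(origin, for_local_host=False):
    """Return the built-in chains a packet visits, in order (simplified)."""
    if origin is Origin.LOCAL:
        # Locally generated traffic never sees PREROUTING, INPUT, or FORWARD.
        return ["OUTPUT", "POSTROUTING"]
    if for_local_host:
        # Routing decision: the packet is addressed to this machine.
        return ["PREROUTING", "INPUT"]
    # Routing decision: the packet is only passing through this machine.
    return ["PREROUTING", "FORWARD", "POSTROUTING"]


print(chains_traversed(Origin.NETWORK, for_local_host=True))
print(chains_traversed(Origin.NETWORK, for_local_host=False))
print(chains_traversed(Origin.LOCAL))
```

Note that no single packet passes through all five chains: INPUT and FORWARD are alternatives selected by the routing decision.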

In iptables, there are five built-in chains, and not every table contains all of them. The “filter” table has the INPUT, FORWARD, and OUTPUT chains. The “nat” table has PREROUTING, OUTPUT, and POSTROUTING (plus INPUT on newer kernels). The “mangle” table has all five chains. The “raw” table has only PREROUTING and OUTPUT.

The “security” table, which is used for Mandatory Access Control (MAC) networking rules, has the INPUT, FORWARD, and OUTPUT chains. It does not have the PREROUTING or POSTROUTING chains.
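The table-to-chain relationship is easy to get wrong, so a small lookup can help. The sketch below encodes the built-in chains per table as listed in the iptables man page; `BUILTIN_CHAINS`, `has_chain`, and `tables_with` are hypothetical names, and the INPUT entry for `nat` assumes a reasonably recent kernel.

```python
# Built-in chains per table, following the iptables man page.
# Assumption: the nat table's INPUT chain is only present on newer kernels.
BUILTIN_CHAINS = {
    "filter": ["INPUT", "FORWARD", "OUTPUT"],
    "nat": ["PREROUTING", "INPUT", "OUTPUT", "POSTROUTING"],
    "mangle": ["PREROUTING", "INPUT", "FORWARD", "OUTPUT", "POSTROUTING"],
    "raw": ["PREROUTING", "OUTPUT"],
    "security": ["INPUT", "FORWARD", "OUTPUT"],
}


def has_chain(table, chain):
    """Return True if the given table has the given built-in chain."""
    return chain in BUILTIN_CHAINS.get(table, [])


def tables_with(chain):
    """Return the names of all tables that contain the given built-in chain."""
    return sorted(t for t, chains in BUILTIN_CHAINS.items() if chain in chains)


print(tables_with("FORWARD"))
print(has_chain("raw", "INPUT"))
```

A helper like `has_chain` could be used to reject a rule such as one appended to `INPUT` in the `raw` table before handing it to iptables.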

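Each built-in chain has a default policy, which applies to packets that reach the end of the chain without being decided by any rule. The policy can be set to either ACCEPT or DROP; user-defined chains have no policy. ACCEPT means that the packet is allowed through, and DROP means that the packet is silently discarded. REJECT, which discards the packet and notifies the sender, is available as a rule target but cannot be used as a chain policy.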
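To make the interplay between rules and the default policy concrete, here is a minimal sketch of first-match evaluation. The `Rule` and `Chain` classes and the dictionary-based packet are hypothetical simplifications, not part of iptables, and the sketch covers only terminating targets.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Rule:
    matches: Callable[[dict], bool]  # predicate over a packet (a plain dict here)
    target: str                      # e.g. "ACCEPT", "DROP", "REJECT"


@dataclass
class Chain:
    policy: str = "ACCEPT"
    rules: List[Rule] = field(default_factory=list)

    def __post_init__(self):
        # Built-in chain policies are limited to ACCEPT and DROP.
        if self.policy not in ("ACCEPT", "DROP"):
            raise ValueError(f"invalid policy: {self.policy}")

    def verdict(self, packet):
        # Rules are checked in order; the first matching rule decides.
        for rule in self.rules:
            if rule.matches(packet):
                return rule.target
        # No rule matched, so the default policy applies.
        return self.policy


input_chain = Chain(
    policy="DROP",
    rules=[
        Rule(lambda p: p["proto"] == "tcp" and p["dport"] == 22, "ACCEPT"),
        Rule(lambda p: p["proto"] == "tcp" and p["dport"] == 23, "REJECT"),
    ],
)

print(input_chain.verdict({"proto": "tcp", "dport": 22}))
print(input_chain.verdict({"proto": "tcp", "dport": 80}))
```

In this model a packet to port 80 matches no rule and therefore falls through to the DROP policy, while REJECT can appear as a rule target but is refused as a policy.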

System administrators can create custom rules that match specific criteria, such as the source or destination IP address, the protocol used, or the port number.
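As an illustration of how such criteria map onto iptables options, the sketch below assembles a command line without running it. The helper `build_rule` is hypothetical; the options it emits (`-t`, `-A`, `-s`, `-d`, `-p`, `--dport`, `-j`) are standard iptables options, and the address `192.0.2.0/24` is a documentation range chosen as a placeholder.

```python
import shlex


def build_rule(chain, target, table="filter", source=None,
               destination=None, protocol=None, dport=None):
    """Build (but do not execute) an iptables command that appends one rule."""
    argv = ["iptables", "-t", table, "-A", chain]
    if source:
        argv += ["-s", source]
    if destination:
        argv += ["-d", destination]
    if protocol:
        argv += ["-p", protocol]
    if dport is not None:
        # This sketch only handles --dport together with tcp or udp.
        if protocol not in ("tcp", "udp"):
            raise ValueError("dport requires protocol tcp or udp")
        argv += ["--dport", str(dport)]
    argv += ["-j", target]
    return argv


cmd = build_rule("INPUT", "ACCEPT", source="192.0.2.0/24",
                 protocol="tcp", dport=22)
print(shlex.join(cmd))
# prints: iptables -t filter -A INPUT -s 192.0.2.0/24 -p tcp --dport 22 -j ACCEPT
```

Returning a list of arguments rather than a single string means the command could later be passed to `subprocess.run` without shell quoting concerns. Actually applying the rule requires root privileges.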

The “mangle” table is used to alter or mark packets. It can modify packet headers in ways that other tables cannot, for example by changing the Time To Live (TTL) value or the Type of Service (TOS) field.
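The following sketch shows what mangle rules of this kind might look like as iptables command lines, built as argument lists and not executed. The helper names are hypothetical; the `TTL` target with `--ttl-set` and the `MARK` target with `--set-mark` are standard iptables extensions, though their availability depends on the kernel modules present on a given system.

```python
def mangle_set_ttl(chain, ttl):
    """Build a mangle-table rule that rewrites the TTL of matching packets."""
    if not 0 <= ttl <= 255:
        raise ValueError("TTL must fit in one byte (0-255)")
    return ["iptables", "-t", "mangle", "-A", chain,
            "-j", "TTL", "--ttl-set", str(ttl)]


def mangle_set_mark(chain, mark):
    """Build a mangle-table rule that sets the netfilter mark on packets."""
    if mark < 0:
        raise ValueError("mark must be non-negative")
    return ["iptables", "-t", "mangle", "-A", chain,
            "-j", "MARK", "--set-mark", hex(mark)]


print(" ".join(mangle_set_ttl("PREROUTING", 64)))
print(" ".join(mangle_set_mark("PREROUTING", 1)))
```

The mark set by `MARK` lives only inside the kernel and is not written into the packet itself, whereas the `TTL` target changes the header that goes out on the wire.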