“The attention mechanism allows the model to create the context vector as a weighted sum of the hidden states of the encoder RNN at each previous timestamp.”

“Transformer is a type of model based entirely on attention, and does not require recurrent or convolutional layers”

The context vector is the output of the Encoder in an Encoder-Decoder network (EDN). Because a single fixed-length vector has to carry everything the decoder needs, EDNs struggle to retain enough information for the decoder to decode accurately, especially on long inputs. Attention is a mechanism to solve this problem.

“Attention mechanisms let a model directly look at, and draw from, the state at any earlier point in the sentence. The attention layer can access all previous states and weighs them according to some learned measure of relevancy to the current token, providing sharper information about far-away relevant tokens.”

GPT: Generative Pre-trained Transformer. Unlike BERT, which is geared toward comprehension tasks, GPT is geared toward writing and other generative tasks. It is pre-trained with unsupervised (self-supervised) learning on raw text, using a deep decoder-only Transformer rather than a full seq2seq encoder-decoder. Pre-training does not use reinforcement learning (feedback from an environment) or labeled supervised data. It uses “masked self-attention”, in which each position can attend only to earlier positions, to predict the next token during training on its dataset.
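To make the masking idea concrete, here is a minimal sketch in plain Python. It assumes the raw relevance scores are already given as a square matrix (the all-zero `scores` below are made up for illustration) and only shows the masking and normalization step, not a full GPT layer. The helper names `softmax` and `causal_attention_weights` are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention_weights(scores):
    """Mask out future positions in a square score matrix, then softmax each row.

    scores[i][j] is the raw relevance of position j to position i.
    Positions j > i (the future) are set to -inf so they receive zero weight.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        masked = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        weights.append(softmax(masked))
    return weights

# Made-up raw scores for a 3-token sequence (all equal, to isolate the mask).
scores = [[0.0, 0.0, 0.0] for _ in range(3)]
weights = causal_attention_weights(scores)
for row in weights:
    print([round(w, 3) for w in row])
```

With equal scores, the first token can only attend to itself, the second splits its weight over the first two tokens, and the third spreads it over all three. No row ever puts weight on a later position, which is what lets the model be trained to predict the next token without seeing it.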

The term “generative” is used to emphasize GPT’s ability to generate new, original text, rather than just processing or analyzing text that already exists. A generative model is a type of machine learning model that is trained to produce data, such as text, images, or music, that is similar to the data it was trained on. GPT is a generative model because it is trained on a large corpus of text data and can then generate new text that is similar to the text in its training data. This allows GPT to produce human-like text on a wide range of topics, which can be useful for a variety of applications, such as language translation, text summarization, and question answering.

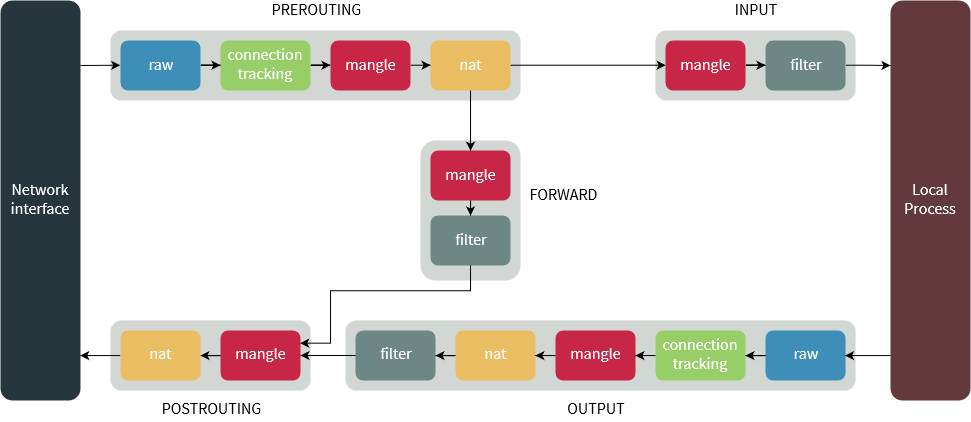

A “transformer” is a type of neural network architecture that was introduced in 2017. It is a deep learning model that is used for natural language processing tasks, such as language translation and text summarization. A transformer consists of two main components: an encoder, which processes the input text, and a decoder, which generates the output text. The encoder and decoder are connected by a series of attention mechanisms, which allow the model to focus on different parts of the input text as it generates the output. This architecture allows the model to process input text in a parallel, rather than sequential, manner, which makes it more efficient and effective than previous models. The transformer architecture has been widely adopted in natural language processing and has been shown to be highly effective for many tasks.

In a transformer, the “attention” mechanism allows the model to focus on different parts of the input text at different times as it generates the output text. This is different from previous neural network models, which processed the input text sequentially, one word at a time. The attention mechanism in a transformer works by calculating a weight for each word in the input text. This weight represents the importance of that word in the context of the current output word that the model is generating. The model then uses these weights to decide which words in the input text to focus on as it generates the output. This allows the model to selectively focus on the most relevant words in the input text.
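The weight calculation described above can be sketched as follows. This assumes the scaled dot-product form of attention, with one query vector for the current output word and one key and value vector per input word. The vectors are toy numbers, and `attend`, `keys`, `values`, and `query` are illustrative names rather than any library's API.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values):
    """Return (weights, output) for one query over all input positions."""
    scale = math.sqrt(len(query))
    # One raw score per input word: similarity between the query and that word's key.
    scores = [dot(query, k) / scale for k in keys]
    # Softmax turns the scores into weights that sum to 1.
    weights = softmax(scores)
    # The output is the weighted sum of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output

# Toy 2-dimensional vectors for a three-word input (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]  # points in the same direction as the first key

weights, output = attend(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The second word's key is orthogonal to the query, so it gets the smallest weight and contributes least to the output. In a real transformer the queries, keys, and values come from learned projections, and every position is processed at once as a matrix operation.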

The Transformer (https://en.m.wikipedia.org/wiki/Transformer_(machine_learning_model)) was first introduced in June 2017 by the Google Brain team.

GPT was released June 2018 by OpenAI.

BERT was released Oct 2018 by Google.

GPT-2 was announced Feb 2019 by OpenAI, trained on 40GB of text.

GPT-3 was introduced in May 2020 and was in beta testing by July 2020. It was trained on roughly 10x the data, or about 400GB.

BERT is a response to GPT and GPT-2 is in turn a response to BERT.

This attention concept looks akin to a Fourier or Laplace transform, which encodes the entire input signal losslessly in a way that allows sections or bands of it to be referred to later. Although it is implemented differently, it’s a way to keep track of and refer to global state.

AutoML and Transformer – http://ai.googleblog.com/2019/06/applying-automl-to-transformer.html

BERT and GPT are both based on the Transformer ideas. BERT is bidirectional and better at comprehending meaning from the whole sentence/phrase, whereas GPT is better at generating text.

https://jalammar.github.io/illustrated-transformer/

Bahdanau et al. (2014) introduced the concept of Attention: https://arxiv.org/abs/1409.0473

“The most important distinguishing feature of this approach from the basic encoder–decoder is that it does not attempt to encode a whole input sentence into a single fixed-length vector. Instead, it encodes the input sentence into a sequence of vectors and chooses a subset of these vectors adaptively while decoding the translation. This frees a neural translation model from having to squash all the information of a source sentence, regardless of its length, into a fixed-length vector. We show this allows a model to cope better with long sentences.”
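In symbols, the mechanism the quote describes can be summarized roughly as below. Here $h_j$ is the encoder vector for source position $j$, $s_{i-1}$ is the previous decoder state, and $c_i$ is the context vector used for output position $i$. The additive scoring function with parameters $v_a$, $W_a$, $U_a$ is the form used in the linked paper; other scoring functions (such as a dot product) are common in later work.

```latex
\begin{align}
e_{ij}      &= v_a^{\top} \tanh\left(W_a s_{i-1} + U_a h_j\right) \\
\alpha_{ij} &= \frac{\exp(e_{ij})}{\sum_{k} \exp(e_{ik})} \\
c_i         &= \sum_{j} \alpha_{ij} h_j
\end{align}
```

The weights $\alpha_{ij}$ sum to 1 over $j$, so each $c_i$ is a weighted average of the encoder vectors, recomputed for every output position instead of being fixed once for the whole sentence.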

This description makes it more like a wavelet transform, which computes auto-correlations of a signal at different levels of granularity to make sense of it.

Conceptual progression

- Input -> Encoder -> Decoder -> Output

- The Encoder maintains Hidden States to parse/grok the input. These are vectors. Once it has gone through the input, it passes the final Hidden State, called the Context, forward to the Decoder.

- This Context is the bottleneck in the operation of the Decoder.

- The Attention concept introduced by Bahdanau and others was designed to overcome the bottleneck in the Context.

- With Attention the entire set of intermediate Hidden states is passed on to the Decoder, not just the final Context.

- The Decoder performs a couple of additional steps compared to before: a) it assigns a score to each Hidden state, and b) it multiplies each Hidden state by its (softmax-normalized) score. The scored vectors are then summed into a context vector that the Decoder uses to produce the Output.
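The progression above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the hidden states are made-up 2-dimensional vectors, the scoring function is a simple dot product (the Bahdanau paper uses a small learned network instead), and `attention_context` is a hypothetical helper name.

```python
import math

def attention_context(decoder_state, encoder_states):
    """Score every encoder hidden state, then return their weighted sum."""
    # a) Score each hidden state against the current decoder state
    #    (dot product is an assumed scoring function).
    scores = [sum(d * h for d, h in zip(decoder_state, hs)) for hs in encoder_states]
    # Normalize the scores into weights that sum to 1 (softmax).
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # b) Multiply each hidden state by its weight and sum the results.
    dim = len(encoder_states[0])
    return [sum(w * hs[i] for w, hs in zip(weights, encoder_states)) for i in range(dim)]

# Made-up encoder hidden states, one per input token.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

# Without attention: the decoder only ever sees the final hidden state.
fixed_context = encoder_states[-1]

# With attention: each decoder step builds its own context from all hidden states.
step1 = attention_context([5.0, 0.0], encoder_states)
step2 = attention_context([0.0, 5.0], encoder_states)
print(fixed_context, step1, step2)
```

The two decoder steps end up with different context vectors, each dominated by the hidden state most similar to the current decoder state, while the no-attention context stays the same for every step.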