Amazon SageMaker HyperPod is infrastructure purpose-built for distributed training at scale. It provides a high-performance environment that speeds up the training of large machine learning models by optimizing resource allocation, reducing communication overhead, and scaling seamlessly. HyperPod integrates with SageMaker to simplify complex training workflows, making it easier to train foundation models and other large-scale ML workloads efficiently, which in turn supports faster iteration on AI models. https://aws.amazon.com/sagemaker/hyperpod , https://aws.amazon.com/blogs/machine-learning/introducing-amazon-sagemaker-hyperpod-to-train-foundation-models-at-scale

Perplexity, a generative AI startup, improved its model training speed by 40% using Amazon SageMaker HyperPod on AWS. Using HyperPod's distributed training capabilities together with Amazon EC2 instances, the company optimized both model training and inference. As a result, it handles more than 100,000 queries per hour with low latency and high throughput, which improves the user experience and enables rapid iteration in AI development. https://aws.amazon.com/solutions/case-studies/perplexity-case-study

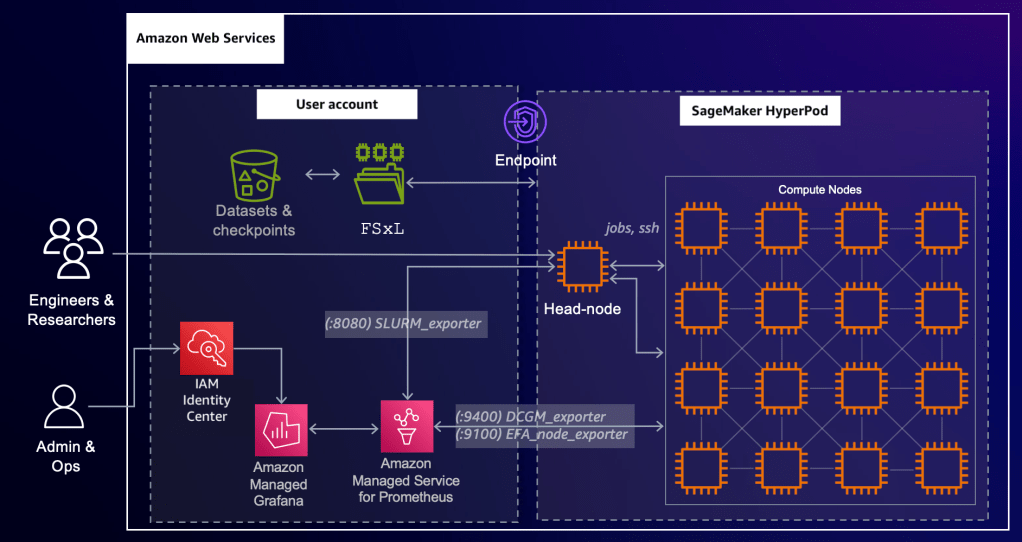

https://docs.aws.amazon.com/sagemaker/latest/dg/sagemaker-hyperpod-cluster-observability.html